Shortened roundup this week. I may keep to this format going forward, as I think the longer writeups I was doing are better suited to my weekly articles. I’ll link some other good AI roundups below if you want more. Also check out my longform post for this week, The Promise and The Peril of Agentic AI, on Auto-GPT and the broader emerging trends.

Top Ten News o’ the Week

An AI-generated song “featuring” AI imitations of Drake and The Weeknd went viral on TikTok before it was pulled down via a copyright claim. Can you file a copyright claim against AI-generated content? Who knows! Lawyers will have fun with this.

Stability AI released StableLM, its own suite of LLMs. These add to the growing list of freely available open-source options.

Amazon came out with its own suite of services. First, AWS Bedrock is a new hosted service that makes several non-OpenAI LLMs available: Anthropic’s Claude, AI21’s Jurassic-2, Stability’s StableLM/Stable Diffusion, and a new Amazon LLM, Amazon Titan. Second, Amazon announced new instances specifically designed to minimize training and inference costs. Finally, Amazon CodeWhisperer, a GitHub Copilot competitor, is now generally available.

Microsoft has been developing its own AI chip, called Athena, since 2019. This is a big deal if Microsoft can pull it off: Google has a backend advantage thanks to its custom TPU design, but hasn’t yet been able to exploit it. If Athena works, that advantage goes away.

Reddit announced plans to charge companies that use its content to train AI models. I think you’ll see a lot more of this.

Epic Systems and Microsoft announced a partnership on using AI with electronic health records. I think this is an amazing use case; a huge chunk of a typical medical office’s workload is formulaic. Critics have raised bias and false information, which are valid concerns but can be mitigated.

Good NYT reporting on Google’s “Project Magi,” the latest iteration of its attempts to integrate AI features into its current offerings. The article describes internal “panic” over the perception that Google is behind. Samsung is reportedly threatening to switch its default search provider to Bing, though that may just be a well-timed negotiating tactic.

Sam Altman: GPT-5 is not currently being trained and won’t be for some time. He also says LLM size will matter less going forward. I think this is true, up to a point.

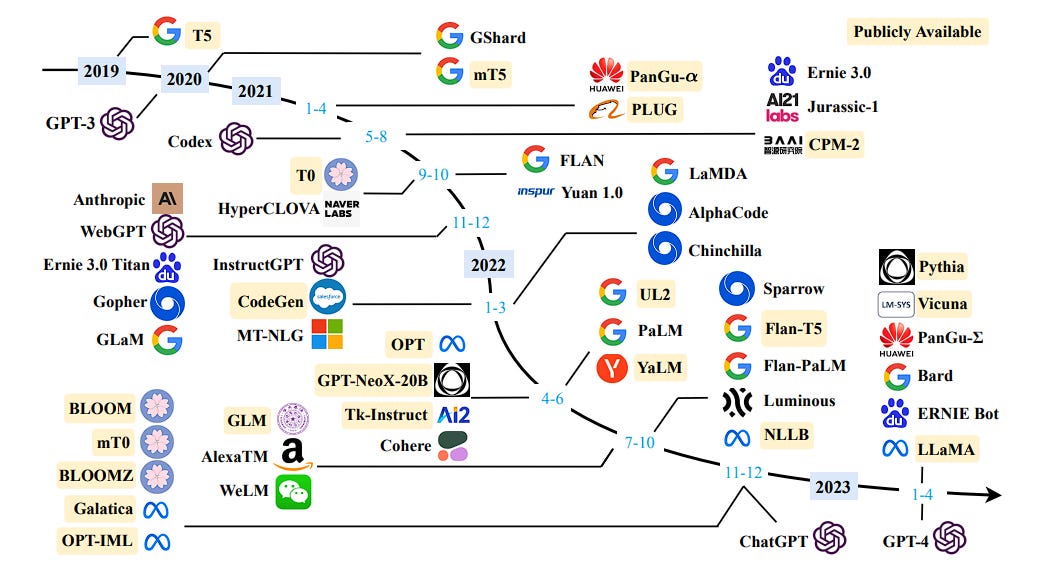

“A Survey of Large Language Models.” A great paper that doubles as an excellent literature review of LLMs (its image is excerpted at the top of this article).

New York Times article: “35 Ways Real People Are Using A.I. Right Now” (non-paywalled gift link). It’s a bit random, but it shows how much real-world engagement AI is already getting.

AI Roundups Recommendation

Daily: I’ve tried a few, but Ben’s Bites is the best daily AI roundup I’ve seen so far. Others that are good but more hype-y: The Neuron and Bot Eat Brain.

Weekly: Besides this humble offering, of course :) There are tons of great ones I’ve been reading:

Andrew Ng’s The Batch (best general-purpose roundup)

Jack Clark’s Import AI (more policy focused)

Carlos’s Data Machina (more technical)

Zvi Mowshowitz’s Don’t Worry About the Vase (not just AI; more AI-safety focused)

Daniel Miessler’s Unsupervised Learning (AI+Security+broader tech things)

Monthly: Sebastian Raschka’s Ahead of AI is fabulous for its technical depth.

In Closing

Guess the Founders were more advanced than we thought…

Have a good weekend!

Standard disclaimer: All views presented are those of the author and do not represent the views of the U.S. government or any of its components.